SQL Sentry Event Manager uses a system of conditions and actions for handling events. You define one or more actions to be taken when a pre-defined condition is met. There are over a hundred pre-defined conditions, ranging from a SQL Server Agent job failing, to a runtime threshold being exceeded for a T-SQL query, to the minimum value threshold for a performance counter being crossed. Whether a condition supports a specific action depends on whether that action makes sense in that context. For example, “Run QuickTrace” is not an action for the “SQL Server: Deadlock” condition, because when a deadlock is detected the event has already occurred, and the QuickTrace would not contain anything relevant.

Two of the actions that are available for just about every condition, though, are “Execute Process” and “Execute SQL”. When the associated condition is met, these two actions let you execute either a Windows process or a T-SQL command sequence on a specified server that is watched by SQL Sentry. This can be useful for several reasons, but what makes them even better is the ability to pass all of the event data, or specific parts of it, as parameters to be used by that process or T-SQL batch.

A great use for this feature is the automatic generation of technical incident records for ticketing systems. You can monitor for an event and have it sent directly to your ticketing system for review by front-line technicians, with all the information they need to make decisions, without you ever touching a keyboard or even being present. For the rest of this post, I’ll go over a way to do just that, and hopefully it will spark some good ideas for anyone reading.

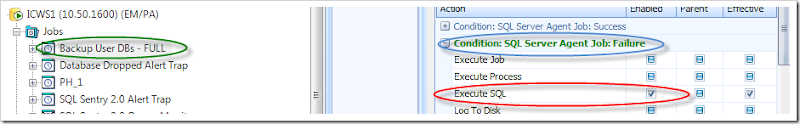

I’m going to assume that we’re already monitoring the server with SQL Sentry Event Manager, so from this point we want to choose a good candidate condition. One that is pretty common for a situation like this is “SQL Server: Agent Job Failure.” This condition is met when a SQL Server Agent job that is watched by SQL Sentry Event Manager logs a failure. We’ll enable the “Execute SQL” action for a backup job, as illustrated below.

At this point we’ve got everything enabled, and the SQL Sentry service will attempt to execute a T-SQL command batch whenever this job fails. We do still have something extremely important missing though, and that is what T-SQL to run, and where to run it.

We want to not only call a stored procedure that will create a support ticket, but also pass information from the event that can be used by a technician to locate and resolve the problem. In addition, we want to pass in a custom value of our own that will help the ticketing system classify and prioritize this incident.

The SQL Sentry feature that is going to allow us to do all of this is called “Execute Action Parameters.” You can read our documentation on this feature in our online help here: http://www.sqlsentry.net/help/ug/?System_Parameters.html, but we’re also going to spend some time setting this up and writing some code below.

For the purpose of this post, we’re going to keep it simple. I’ve created a small database called “Ticketing” and added the following table definition:

    CREATE TABLE Tickets
    (
        TicketID int NOT NULL identity(1,1)
            CONSTRAINT PK_Tickets_TicketID
            PRIMARY KEY CLUSTERED (TicketID),
        Title nvarchar(128) NOT NULL,
        [Description] nvarchar(2000) NOT NULL,
        ClassCD nchar(3) NOT NULL,
        PriorityHint tinyint NOT NULL
    )

Obviously, a real ticketing system will require more information, but the basics are here, as well as two columns (ClassCD and PriorityHint) we can use to demonstrate custom user parameters.

I will also use the following stored procedure to populate the Tickets table:

    USE Ticketing;
    GO

    CREATE PROC InsertTicket
        @Title nvarchar(128),
        @Description nvarchar(2000),
        @ClassCD nchar(3),
        @PriorityHint tinyint = 0
    AS
    INSERT INTO [Ticketing].[dbo].[Tickets]
        ([Title]
        ,[Description]
        ,[ClassCD]
        ,[PriorityHint])
    VALUES
        (@Title
        ,@Description
        ,@ClassCD
        ,@PriorityHint)
    GO

To this point it’s all very straightforward. We have a data table for storing ticketing data, and a simple stored procedure for populating it.
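Before wiring the procedure into SQL Sentry, it can be worth calling it by hand to confirm that it inserts a row. The following is only a quick smoke test under my own assumptions: the title, description, and the “TST” class code are made-up test values, not anything SQL Sentry produces.

```sql
USE Ticketing;
GO

-- Hypothetical test values; @PriorityHint is omitted so it takes its default of 0
EXECUTE dbo.InsertTicket
    @Title = N'Test ticket',
    @Description = N'Manual smoke test of InsertTicket',
    @ClassCD = N'TST';

-- Newest ticket first, so the test row shows up at the top
SELECT TicketID, Title, [Description], ClassCD, PriorityHint
FROM dbo.Tickets
ORDER BY TicketID DESC;
```

If the row appears with a PriorityHint of 0, the procedure and its default parameter are behaving as intended, and the test row can be deleted.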

The interesting part comes when I define the T-SQL to run for my Execute SQL action. Below I will list the raw T-SQL as well as what it should look like in the actions configuration area of the SQL Sentry console. Then I will explain the special elements we see in the code and what they mean.

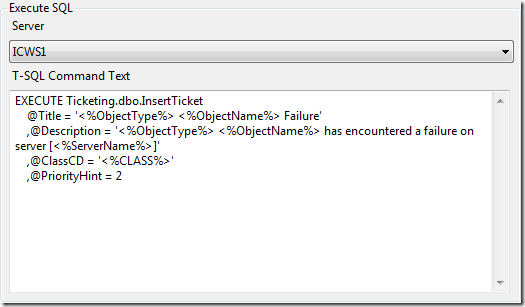

Entering the T-SQL command for the Execute SQL action is pretty easy. It’s done in the same area of the console application that was used to enable the “Execute SQL” action.

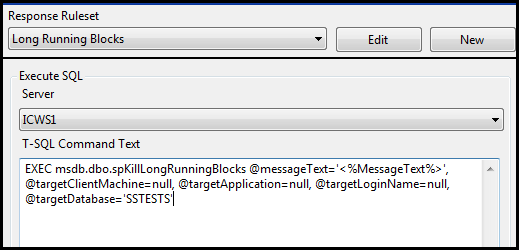

As seen below, you can select which server to execute the command on, and enter the command itself. Remember that only those servers that are monitored by SQL Sentry Event Manager will be listed in the “Server” drop-down.

I’m going to select my local server, since that’s really the only one I am monitoring right now, and enter the following for my “T-SQL Command Text”.

    EXECUTE Ticketing.dbo.InsertTicket
        @Title = '<%ObjectType%> <%ObjectName%> Failure'
       ,@Description = '<%ObjectType%> <%ObjectName%> has encountered a failure on server [<%ServerName%>]'
       ,@ClassCD = '<%CLASS%>'
       ,@PriorityHint = 2

At first glance, this is just a normal EXECUTE statement, but the string literals contain some interesting elements that tie into event processing in SQL Sentry and deserve some additional explanation.

First, any time you see the format <%…%> in this context, it tells SQL Sentry to substitute some part of the event data in place of the literal text. Take “<%ObjectName%>” for instance. Before this command is executed, every instance of “<%ObjectName%>” is replaced with the name of the object that caused the event. In this case that is our backup job, “Backup User DBs – FULL.” The parameter names make it clear what information each one injects into the command, with one exception. “<%CLASS%>” is special: it is what we call a “User Parameter”, and I will go into more detail about that in a bit.

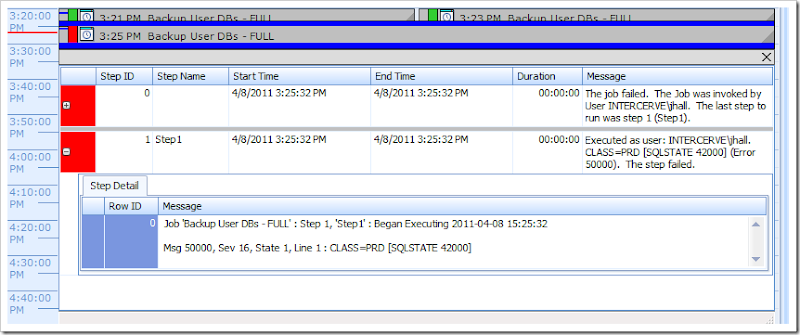

So, given this command, when my backup job fails, I should get a row in the Tickets table telling me that I need to investigate this tragic event. Now I’ll force the job to fail, and we can see it on the SQL Sentry event calendar, and I’ll also list the contents of the Tickets table.

Above you can see the job failure and the detail for the steps in the job. This job simply threw an error with the message “CLASS=PRD.” That really doesn’t mean much, but it becomes important when we look at the user parameter in a bit. Now let’s see what we end up with in the Tickets table below:

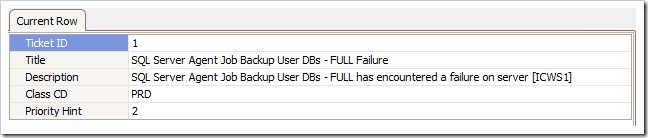

My execute action parameters were replaced by meaningful information about the event. I know what job failed, and what server it failed on, and I can go investigate what happened using my SQL Sentry calendar view.
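To make the substitution concrete, here is roughly what the command would look like after the parameters are replaced and just before it runs. This is a sketch, not captured output: the job name and the “PRD” class come from this post, but I am assuming that <%ObjectType%> resolves to something like “Job”, and MYSERVER is a placeholder server name.

```sql
-- Approximate command text after parameter substitution.
-- 'Job' and 'MYSERVER' are assumed values used for illustration only.
EXECUTE Ticketing.dbo.InsertTicket
    @Title = 'Job Backup User DBs – FULL Failure'
   ,@Description = 'Job Backup User DBs – FULL has encountered a failure on server [MYSERVER]'
   ,@ClassCD = 'PRD'   -- taken from the CLASS=PRD text in the job output
   ,@PriorityHint = 2; -- literal value, passed through unchanged
```

Because the values are dropped into string literals, it is worth keeping in mind how an object name containing a single quote would affect the resulting statement.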

If this were a database for a real ticketing system, I’m sure several members of the technical staff would have seen it, and it would be classified and prioritized. Notice the “Class CD” which I mean to stand for “Class Code” and “Priority Hint” which I intend to be used to hint at the priority level.

Let’s assume that “PRD” stands for “Production” in a list of codes somewhere, and that “2” is hinting at a priority of 2. You can see how I could send in a custom parameter from my event output, or just a literal value, such as the priority hint.
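As a rough illustration of how a ticketing system might use these two columns, the query below lists tickets in the order a technician might work them. The mapping of “PRD” to “Production” follows the assumption above; treating a lower PriorityHint as more urgent is purely my own assumption for this sketch.

```sql
USE Ticketing;
GO

-- Translate the class code into a readable name and sort by the priority hint.
-- Assumption: lower PriorityHint values are more urgent.
SELECT TicketID,
       Title,
       CASE ClassCD
            WHEN N'PRD' THEN N'Production'
            ELSE N'Unclassified'
       END AS ClassName,
       PriorityHint
FROM dbo.Tickets
ORDER BY PriorityHint, TicketID;
```

In a real system the CASE expression would more likely be a join to a lookup table of class codes.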

The error I raised in the job step is where I got the ClassCD from. I could just as easily have done this with a code comment, PRINT statement, or other mechanism, since all this needs in order to work is for the NAME=VALUE pair (in this case CLASS=PRD) to appear somewhere in the output of the event. I put it in the error message here because I knew I was going to make the job fail, and it was just quicker. It’s really just a T-SQL RAISERROR statement, listed below:

    RAISERROR ('CLASS=PRD', 16, 1);

This is the user parameters feature that I was discussing earlier. I could embed any number of these into the output of an event, and use them to control how the event data is processed when the support ticket is generated. All that is required is a NAME=VALUE pair in the output. You then reference it in the command text using the format <%NAME%>. In my case it was <%CLASS%>.
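As a sketch of what more than one user parameter could look like, the job step below emits two NAME=VALUE pairs with PRINT and then fails. TEAM=DBA is a hypothetical second parameter invented for this example, and I am assuming that PRINT output ends up in the event output in the same way the error message did.

```sql
-- Hypothetical job step: emit the NAME=VALUE pairs, then force a failure
PRINT 'CLASS=PRD';
PRINT 'TEAM=DBA';   -- made-up second user parameter
RAISERROR ('Backup failed', 16, 1);
```

The Execute SQL command text could then reference both names. Here the hypothetical <%TEAM%> value is folded into the description, since the Tickets table has no column of its own for it:

```sql
EXECUTE Ticketing.dbo.InsertTicket
    @Title = '<%ObjectType%> <%ObjectName%> Failure'
   ,@Description = '<%ObjectType%> <%ObjectName%> has encountered a failure on server [<%ServerName%>]; owning team: <%TEAM%>'
   ,@ClassCD = '<%CLASS%>'
   ,@PriorityHint = 2
```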