A customer recently came across a problem in which a third-party system was causing long block chains on one of his servers. The best solution he could come up with was really just to kill the blocking SPID when this happens. Knowing that SQL Sentry monitors for blocks and tracks their duration, he asked if there was a way to automate killing the blocking SPID after a specified duration.

This is not something that is built into SQL Sentry as standard functionality, but with some creative thought we were able to come up with a solution using existing SQL Sentry functionality combined with some out-of-band use of one of the remote procedures SQL Sentry employs on a monitored server.

First and foremost we need a way to ID the blocking SPIDs at the head of any block chains. We also need a way to target only the long running blocks, not all of them.

On every SQL Sentry Performance Advisor monitored server, a few utility objects are created in the MSDB database. These are used to reduce network chatter between the SQL Sentry server and monitored SQL Servers. One of these objects is called “spGetBlockInfo_20” (the _20 is just a versioning mechanism, please don’t get stuck on it). This is a stored procedure that can provide us with information about blocked and blocking processes and includes useful information like the wait time, database name, time of last batch, login name and plenty of other things that I’ll skip for brevity. It is the same mechanism that provides raw block information to the '”Blocking SQL” tab in SQL Sentry Performance Advisor.

My first instinct was to write a simple procedure that will load the block information into a temp table and use a cursor to build a dynamic kill statement, finally executing the statement with sp_executesql. It would have one parameter that simply specified the number of minutes since the last batch to use for block duration. This would be done without the cursor due to the original intention to kill multiple blocks, and it could possibly go that way again some day, so it was left as a cursor for now. The first cut of this is below:

First Draft

CREATE PROCEDURE dbo.spKillLongRunningBlocks

@minutesSinceLastBatch int

AS

CREATE TABLE #tmp

(

spid smallint,

blocked smallint,

waittype binary(2),

waittime int,

waitresource nvarchar(256),

dbname sysname,

last_batch datetime,

ecid smallint,

hostname nchar(128),

[program_name] nchar(128),

loginame nchar(128),

cmdtext ntext,

waittext nvarchar(1000)

);

INSERT #tmp

EXEC spGetBlockInfo_20;

DECLARE cspids CURSOR FAST_FORWARD FOR

SELECT spid, last_batch

FROM #tmp

WHERE #tmp.blocked = 0

AND DATEDIFF(minute, #tmp.last_batch, GETDATE()) >= @minutesSinceLastBatch;

DECLARE @spid int;

DECLARE @lastBatch datetime;

DECLARE @killCmd nvarchar(2000);

OPEN cspids;

FETCH NEXT FROM cspids INTO @spid, @lastBatch;

SET @killCmd = '';

IF @@FETCH_STATUS = 0

BEGIN

SET @killCmd = @killCmd + 'KILL ' + CAST(@spid AS nvarchar(5)) + ';';

EXEC sp_executesql @killCmd;

END;

CLOSE cspids;

DEALLOCATE cspids;

DROP TABLE #tmp;

This worked pretty well actually, until I ran the procedure code by the product architect for SQL Sentry Performance Advisor Greg Gonzalez who quickly pointed out that for RPC processes that last_batch field can often show up as ‘01/01/1900’. This would create a situation where we might kill the wrong processes, so I had to find another way to determine the duration. So, after a bit of though I concluded that the process with the highest wait time and the SPID in question as it’s blocker should provide the most accurate duration. Luckily, “waittime” is already part of the output of “spGetBlockInfo_20”. It is in milliseconds, so I had to multiply the input by 60 then by 1000 to convert it for comparison. This next version includes this change on lines 26-28:

Edited for Duration Accuracy

CREATE PROCEDURE dbo.spKillLongRunningBlocks

@minutesSinceLastBatch int

AS

CREATE TABLE #tmp

(

spid smallint,

blocked smallint,

waittype binary(2),

waittime int,

waitresource nvarchar(256),

dbname sysname,

last_batch datetime,

ecid smallint,

hostname nchar(128),

[program_name] nchar(128),

loginame nchar(128),

cmdtext ntext,

waittext nvarchar(1000)

);

INSERT #tmp

EXEC spGetBlockInfo_20;

DECLARE cspids CURSOR FAST_FORWARD FOR

SELECT spid, last_batch

FROM #tmp

WHERE #tmp.blocked = 0

AND (SELECT MAX(t2.waittime)

FROM #tmp t2

WHERE t2.blocked = #tmp.spid) > CAST((@minutesSinceLastBatch * 60) * 1000 as int);

DECLARE @spid int;

DECLARE @lastBatch datetime;

DECLARE @killCmd nvarchar(2000);

OPEN cspids;

FETCH NEXT FROM cspids INTO @spid, @lastBatch;

SET @killCmd = '';

IF @@FETCH_STATUS = 0

BEGIN

SET @killCmd = @killCmd + 'KILL ' + CAST(@spid AS nvarchar(5)) + ';';

EXEC sp_executesql @killCmd;

END;

CLOSE cspids;

DEALLOCATE cspids;

DROP TABLE #tmp;

We need the most accurate solution possible. After all, we are forcibly ending running processes sight unseen on our DBMS server. With that in mind I ran the procedure by architect and lead developer Brooke Philpott. Transforming the raw block data from “spGetBlockInfo_20” into what you can see in the Blocking SQL tab in Performance Advisor is a very complicated process. We can’t assume that all blocks that may still be active after the threshold is met are supposed to be killed. We didn’t have to discuss this very long for me to buy in to the fact that we needed to narrow the margin of error a bit more.

SQL Sentry Event Manager has a feature called Execute Action Parameters. This feature allows one to pass all or parts of the SQL Sentry notification message in as a parameter to the target of an Execute SQL or Execute Process action. For the purpose here, it was easiest to pass in the full message an execute command similar to the following:

EXEC msdb.dbo.spKillLongRunningBlocks '<%MessageText%>'

The standard “blocking detected” message from SQL Sentry contains something very important in making this work. It lists the entire block chain from top to bottom. A simple example is pasted below:

Example: SQL Sentry Blocking Detected Message

[Connection]: ICWS1

[Message]: SQL Server Blocking Detected

[Start Time]: 2/24/2010 2:07:46 PM

[Duration]: 57 minutes, 27 seconds

[Minimum Duration Threshold]: 5 seconds

------------------------------------------------------------------------

[Timestamp (Local)]: 2/24/2010 3:05:14 PM

[Timestamp (UTC)]: 2/24/2010 8:05:14 PM

[Generated By]: SQL Sentry 5.5 Server [ICWS1]

[Version]: 5.5.20.0

[Monitor Type]: SqlServerBlockDetector

[Condition]: SQL Server: Blocking SQL

[Response Ruleset]: Long Running Blocks

------------------------------------------------------------------------

SPID at Head of Blocking Chain:

SPID [ecid]: 81 [0]

Client Machine: ICWS1

Application: SQL Server Agent: Job 'BLOCK_TESTA'

Login Name: NT AUTHORITY\NETWORK SERVICE

Last Batch: 2/24/2010 2:07:41 PM

Wait Type: WAITFOR

Wait Time: 00:00:00

Database: SSTESTS

Command Text:

WAITFOR DELAY '00:00:01'

------------------------------------------------------------------------

Blocked SPID:

SPID [ecid]: 82 [0]

Blocked By SPID: 81

Client Machine: ICWS1

Application: SQL Server Agent: Job 'BLOCK_TESTB'

Login Name: NT AUTHORITY\NETWORK SERVICE

Last Batch: 2/24/2010 2:07:46 PM

Wait Type: LCK_M_RS_S

Wait Time: 00:57:27

Database: SSTESTS

Command Text:

SELECT DISTINCT *

FROM TestTable ORDER BY ID DESC

------------------------------------------------------------------------

All of the information necessary to narrow the selection down to the exact SPID we want to kill is in there. All that is needed from this point is some creative TSQL string parsing and type conversion. In the final version of the procedure I’ve early on where we’re parsing the blocking SPID, client machine, login name and database name from the message text. Since technically the SPID number could have been reused by this time, we need a way to ID more values than just SPID number. With all of these values from the message text we can compare using a composite key to identify a specific block. This fully removes the need to test for any wait time at all, placing that burden back on the SQL Sentry server service where it really belongs. As a result, two things happen. First, the part of the where clause in the cursor that constrains the duration is replaced with a final check to make sure there are still processes being blocked by this SPID. Secondly, we no longer have to specify the number of minutes as a parameter.

Some other important improvements in this version include restricting the SPID query to non-system SPIDs (>50). Imagine the horror when you realize that your automated process is killing important work that SQL Server is trying to do. What’s worse, imagine the emails and phone calls. Yikes! I’ve also included some defaulted parameters that can be used to target specific applications, client machines, logins and/or databases. They are null by default, but are useful for targeting only certain blocks instead of everything across the board.

Edited for Composite Key

CREATE PROCEDURE dbo.spKillLongRunningBlocks

@messageText nvarchar(2000),

@targetClientMachine nvarchar(128) = null,

@targetApplication nvarchar(128) = null,

@targetLoginName nvarchar(128) = null,

@targetDatabase nvarchar(128) = null

AS

--PRINT @messageText;

DECLARE @beforeSpidConstant nvarchar(25);

DECLARE @beforeClientMachineConstant nvarchar(25);

DECLARE @beforeApplicationConstant nvarchar(25);

DECLARE @beforeLoginNameConstant nvarchar(25);

DECLARE @beforeDatabaseConstant nvarchar(25);

SET @beforeSpidConstant = 'SPID [ecid]: ';

SET @beforeClientMachineConstant = 'Client Machine: ';

SET @beforeLoginNameConstant = 'Login Name: ';

SET @beforeDatabaseConstant = 'Database: ';

DECLARE @spidLoc int;

DECLARE @clientMachineLoc int;

DECLARE @loginNameLoc int;

DECLARE @databaseLoc int;

DECLARE @targetSpid nvarchar(5);

SELECT @spidLoc = CHARINDEX(@beforeSpidConstant, @messageText);

SELECT @clientMachineLoc = CHARINDEX(@beforeClientMachineConstant, @messageText);

SELECT @loginNameLoc = CHARINDEX(@beforeLoginNameConstant, @messageText);

SELECT @databaseLoc = CHARINDEX(@beforeDatabaseConstant, @messageText);

SELECT @targetSpid =

SUBSTRING(@messageText,

@spidLoc + LEN(@beforeSpidConstant),

(CHARINDEX(' ', @messageText, @spidLoc + LEN(@beforeSpidConstant))) - (@spidLoc + LEN(@beforeSpidConstant)));

IF @targetClientMachine IS NULL

BEGIN

SELECT @targetClientMachine =

RTRIM(SUBSTRING(@messageText,

@clientMachineLoc + LEN(@beforeClientMachineConstant),

(CHARINDEX(CHAR(13) + char(10), @messageText, @clientMachineLoc + LEN(@beforeClientMachineConstant))) - (@clientMachineLoc + LEN(@beforeClientMachineConstant))));

END

IF @targetLoginName IS NULL

BEGIN

SELECT @targetLoginName =

RTRIM(SUBSTRING(@messageText,

@loginNameLoc + LEN(@beforeLoginNameConstant),

(CHARINDEX(CHAR(13) + char(10), @messageText, @loginNameLoc + LEN(@beforeLoginNameConstant))) - (@loginNameLoc + LEN(@beforeLoginNameConstant))));

END

IF @targetDatabase IS NULL

BEGIN

SELECT @targetDatabase =

RTRIM(SUBSTRING(@messageText,

@databaseLoc + LEN(@beforeDatabaseConstant),

(CHARINDEX(CHAR(13) + char(10), @messageText, @databaseLoc + LEN(@beforeDatabaseConstant))) - (@databaseLoc + LEN(@beforeDatabaseConstant))));

END

--PRINT @targetSpid;

--PRINT @targetClientMachine;

--PRINT @targetLoginName;

--PRINT @targetDatabase;

CREATE TABLE #tmp

(

spid smallint,

blocked smallint,

waittype binary(2),

waittime int,

waitresource nvarchar(256),

dbname sysname,

last_batch datetime,

ecid smallint,

hostname nchar(128),

[program_name] nchar(128),

loginame nchar(128),

cmdtext ntext,

waittext nvarchar(1000)

);

INSERT #tmp

EXEC spGetBlockInfo_20;

DECLARE cspids CURSOR FAST_FORWARD FOR

SELECT

spid,

hostname,

[program_name],

loginame,

dbname

FROM #tmp

WHERE #tmp.blocked = 0

AND spid > 50 --Don't kill system SPIDs!!!

AND (SELECT COUNT(t2.spid)

FROM #tmp t2

WHERE t2.blocked = #tmp.spid) > 0;

DECLARE @spid smallint;

DECLARE @clientMachine nvarchar(128);

DECLARE @application nvarchar(128);

DECLARE @loginName nvarchar(128);

DECLARE @database sysname;

DECLARE @killCmd nvarchar(50);

OPEN cspids;

FETCH NEXT FROM cspids INTO @spid, @clientMachine, @application, @loginName, @database;

SET @killCmd = '';

IF @@FETCH_STATUS = 0

BEGIN

--PRINT @targetClientMachine + ' = ' + @clientMachine

--PRINT @targetApplication + ' = ' + @application

--PRINT @targetLoginName + ' = ' + @loginName

--PRINT @targetDatabase + ' = ' + CAST(@database AS nvarchar(128))

IF(CAST(@targetSpid as int) = @spid

AND @targetClientMachine = RTRIM(@clientMachine)

--Application may not always match, so only use it if it was passed in

AND isnull(@targetApplication,RTRIM(@application)) = RTRIM(@application)

AND @targetLoginName = RTRIM(@loginName)

AND @targetDatabase = CAST(@database AS nvarchar(128)))

BEGIN

SET @killCmd = @killCmd + 'KILL ' + CAST(@spid AS nvarchar(5)) + ';';

EXEC sp_executesql @killCmd;

END

END;

CLOSE cspids;

DEALLOCATE cspids;

DROP TABLE #tmp;

So now that we have a mechanism in place to kill the process at the head of any long running block chain, we need a way to trigger it. At first we wanted to use the “Max Runtime Threshold” condition in SQL Sentry Performance Advisor for SQL Server Blocking, but this condition only fires when the block has finished, and it doesn’t include all the items that we want from the message text. We could use the “SQL Server: Blocking SQL” condition since it gets fired as soon as any block that meets the collection threshold is detected, but we really only want this to be triggered after a specified amount of time. The solution lies in Response Rulesets. Using a response ruleset we can use the standard Blocking SQL condition with the Execute SQL action in SQL Sentry, and only allow the action to be performed when a specified amount of time has passed. Since response rulesets can be set for each action (as opposed to each condition) we can still send an email, or save a database log of every single block detected, but only execute our kill procedure when enough time has elapsed since the block was first detected.

First, enable the condition for the server where our kill procedure is placed (you can do this globally as well, but you want to make certain the kill procedure exists on every monitored server in that case):

Enable the Execute SQL Action for the Blocking SQL Condition

Note: Learn more about SQL Sentry conditions and actions here: SQL Sentry Training Videos

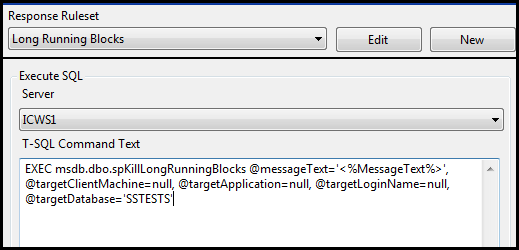

Next, enter the command to execute our block killing procedure. This is the same command discussed above in bold text, but altered to restrict by database. Note that my test database is called ‘SSTESTS’:

Enter the Command for the Execute SQL Action

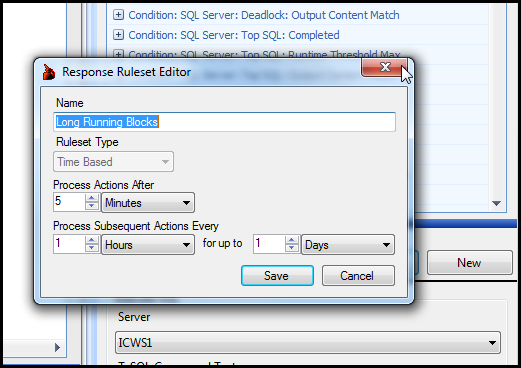

Now, create the response ruleset to only process messages after the block has been active for more than 5 minutes:

Create and Set the Time Based Response Ruleset

Conclusion

Now our solution is in place. Any time a block is detected, and continues blocking for more than 5 minutes, our stored procedure will be run killing only the process at the head of that block chain.

This solution takes advantage of some of the more advanced features that SQL Sentry has to offer, and is just one example of the many solutions that can be built using SQL Sentry and a little creativity.

Anyone that is interested in setting this up please just email support at sqlsentry.net and mention this blog entry. Even if you don’t already use SQL Sentry you can try this out using an evaluation copy from here.